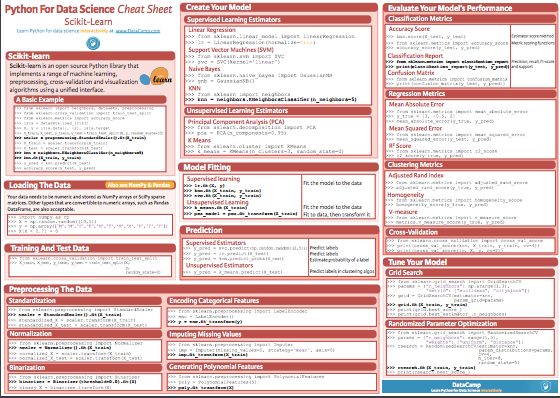

- Cheat Sheet - Machine Learning Models. January 15, 2019 — 14:00. Talita Shiguemoto. This is a summary of the models and metrics I learned in the Nanodegree Machine Learning Engineer from Udacity, in which I told my experience here. I added some models and libraries that I used at other times.

- Machine Learning Cheat Sheet Cameron Taylor November 14, 2019 Introduction This cheat sheet introduces the basics of machine learning and how it relates to traditional econo-metrics. It is accessible with an intermediate background in statistics and econometrics. It is meant to show people that machine learning does not have to be hard.

- Regression (Machine Learning) Cheat Sheet. The Machine Learning for Regression Cheat Sheet provides a high-level overview of each of the modeling algorithms, strengths and weaknesses, and key parameters along with links to programming languages. The Machine Learning for Regression Cheat Sheet is a key component of learning the data science for.

Machine Learning For Dummies Cheat Sheet By John Paul Mueller, Luca Massaron Machine learning is an incredible technology that you use more often than you think today and that has the potential to do even more tomorrow.

This resource is designed primarily for beginner to intermediate data scientists or analysts who are interested in identifying and applying machine learning algorithms to address the problems of their interest.

A typical question asked by a beginner, when facing a wide variety of machine learning algorithms, is “which algorithm should I use?” The answer to the question varies depending on many factors, including:

- The size, quality, and nature of data.

- The available computational time.

- The urgency of the task.

- What you want to do with the data.

Even an experienced data scientist cannot tell which algorithm will perform the best before trying different algorithms. We are not advocating a one-and-done approach, but we do hope to provide some guidance on which algorithms to try first depending on some clear factors.

Editor's note: This post was originally published in 2017. We are republishing it with an updated video tutorial on this topic. You can watch How to Choose a Machine Learning Algorithm below. Or keep reading to find a cheat sheet that helps you find the right algorithm for your project.

The machine learning algorithm cheat sheet

The machine learning algorithm cheat sheet helps you to choose from a variety of machine learning algorithms to find the appropriate algorithm for your specific problems. This article walks you through the process of how to use the sheet.

Since the cheat sheet is designed for beginner data scientists and analysts, we will make some simplified assumptions when talking about the algorithms.

The algorithms recommended here result from compiled feedback and tips from several data scientists and machine learning experts and developers. There are several issues on which we have not reached an agreement and for these issues, we try to highlight the commonality and reconcile the difference.

Additional algorithms will be added in later as our library grows to encompass a more complete set of available methods.

How to use the cheat sheet

Read the path and algorithm labels on the chart as 'If <path label> then use <algorithm>.' For example:

- If you want to perform dimension reduction then use principal component analysis.

- If you need a numeric prediction quickly, use decision trees or linear regression.

- If you need a hierarchical result, use hierarchical clustering.

Sometimes more than one branch will apply, and other times none of them will be a perfect match. It’s important to remember these paths are intended to be rule-of-thumb recommendations, so some of the recommendations are not exact. Several data scientists I talked with said that the only sure way to find the very best algorithm is to try all of them.
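The "If <path label> then use <algorithm>" reading can be sketched as a simple lookup. This is only an illustration: the `CHEAT_SHEET` table and the `suggest_algorithms` helper are hypothetical names, and the table holds just the three example rules listed above, not the full chart.

```python
# Hypothetical lookup table built from the three example rules above;
# the real cheat sheet has many more paths.
CHEAT_SHEET = {
    "dimension reduction": ["principal component analysis"],
    "fast numeric prediction": ["decision trees", "linear regression"],
    "hierarchical result": ["hierarchical clustering"],
}


def suggest_algorithms(path_label):
    """Return candidate algorithms for a path label, or an empty list."""
    return CHEAT_SHEET.get(path_label.strip().lower(), [])


print(suggest_algorithms("Dimension reduction"))
print(suggest_algorithms("Fast numeric prediction"))
```

An unknown label returns an empty list, which matches the point that sometimes none of the branches is a perfect match.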

Types of machine learning algorithms

This section provides an overview of the most popular types of machine learning. If you’re familiar with these categories and want to move on to discussing specific algorithms, you can skip this section and go to “When to use specific algorithms” below.

Supervised learning

Supervised learning algorithms make predictions based on a set of examples. For example, historical sales can be used to estimate future prices. With supervised learning, you have an input variable that consists of labeled training data and a desired output variable. You use an algorithm to analyze the training data to learn the function that maps the input to the output. This inferred function maps new, unknown examples by generalizing from the training data to anticipate results in unseen situations.

- Classification: When the data are being used to predict a categorical variable, supervised learning is also called classification. This is the case when assigning a label or indicator, such as dog or cat, to an image. When there are only two labels, this is called binary classification. When there are more than two categories, the problem is called multi-class classification.

- Regression: When predicting continuous values, the problem becomes a regression problem.

- Forecasting: This is the process of making predictions about the future based on past and present data. It is most commonly used to analyze trends. A common example might be an estimation of the next year sales based on the sales of the current year and previous years.

Semi-supervised learning

The challenge with supervised learning is that labeling data can be expensive and time-consuming. If labels are limited, you can use unlabeled examples to enhance supervised learning. Because the machine is not fully supervised in this case, we say the machine is semi-supervised. With semi-supervised learning, you use unlabeled examples with a small amount of labeled data to improve the learning accuracy.

Unsupervised learning

When performing unsupervised learning, the machine is presented with totally unlabeled data. It is asked to discover the intrinsic patterns that underlie the data, such as a clustering structure, a low-dimensional manifold, or a sparse tree and graph.

- Clustering: Grouping a set of data examples so that examples in one group (or one cluster) are more similar (according to some criteria) than those in other groups. This is often used to segment the whole dataset into several groups. Analysis can be performed in each group to help users to find intrinsic patterns.

- Dimension reduction: Reducing the number of variables under consideration. In many applications, the raw data have very high dimensional features and some features are redundant or irrelevant to the task. Reducing the dimensionality helps to find the true, latent relationship.

Reinforcement learning

Reinforcement learning is another branch of machine learning which is mainly utilized for sequential decision-making problems. In this type of machine learning, unlike supervised and unsupervised learning, we do not need to have any data in advance; instead, the learning agent interacts with an environment and learns the optimal policy on the fly based on the feedback it receives from that environment. Specifically, in each time step, an agent observes the environment’s state, chooses an action, and observes the feedback it receives from the environment. The feedback from an agent’s action has many important components. One component is the resulting state of the environment after the agent has acted on it. Another component is the reward (or punishment) that the agent receives from performing that particular action in that particular state. The reward is carefully chosen to align with the objective for which we are training the agent. Using the state and reward, the agent updates its decision-making policy to optimize its long-term reward. With the recent advancements of deep learning, reinforcement learning gained significant attention since it demonstrated striking performances in a wide range of applications such as games, robotics, and control. To see reinforcement learning models such as Deep-Q and Fitted-Q networks in action, check out this article.
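The observe, act, receive-feedback loop described above can be sketched with tabular Q-learning on a toy problem. Everything here is an assumption made for illustration: the five-state corridor environment, the reward of 1 at the right-most state, the purely random exploration, and the values of `GAMMA` and `ALPHA`. It is not one of the Deep-Q or Fitted-Q models mentioned in the text.

```python
import random

N_STATES = 5          # states 0..4 on a line; state 4 is the goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right
GAMMA, ALPHA = 0.9, 0.5


def step(state, action_index):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, seed=0):
    """Learn a Q-table from interaction only; no data is given in advance."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.randrange(2)  # explore uniformly at random
            next_state, reward, done = step(state, action)
            # Target = immediate reward + discounted best future value
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

The greedy policy read off the learned table moves toward the rewarded state, which is the sense in which the agent optimizes its long-term reward.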

Considerations when choosing an algorithm

When choosing an algorithm, always take these aspects into account: accuracy, training time and ease of use. Many users put the accuracy first, while beginners tend to focus on algorithms they know best.

When presented with a dataset, the first thing to consider is how to obtain results, no matter what those results might look like. Beginners tend to choose algorithms that are easy to implement and can obtain results quickly. This works fine, as long as it is just the first step in the process. Once you obtain some results and become familiar with the data, you may spend more time using more sophisticated algorithms to strengthen your understanding of the data, hence further improving the results.

Even in this stage, the best algorithms might not be the methods that have achieved the highest reported accuracy, as an algorithm usually requires careful tuning and extensive training to obtain its best achievable performance.

When to use specific algorithms

Looking more closely at individual algorithms can help you understand what they provide and how they are used. These descriptions provide more details and give additional tips for when to use specific algorithms, in alignment with the cheat sheet.

Linear regression and Logistic regression

Linear regression is an approach for modeling the relationship between a continuous dependent variable \(y\) and one or more predictors \(X\). The relationship between \(y\) and \(X\) can be linearly modeled as \(y=\beta^T X+\epsilon\). Given the training examples \(\{x_i,y_i\}_{i=1}^N\), the parameter vector \(\beta\) can be learnt.
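As a minimal sketch of learning the parameters, the single-predictor case has a closed-form least-squares solution. The `fit_line` helper and the data are made up for illustration; the intercept plays the role of the coefficient on a constant feature in the parameter vector.

```python
def fit_line(xs, ys):
    """Least-squares estimates (intercept, slope) for y = b0 + b1 * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Made-up data generated from y = 1 + 2x with no noise
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
intercept, slope = fit_line(xs, ys)
print(intercept, slope)
```

With noise-free data the fit recovers the generating line; with real data the same formulas give the line that minimizes the squared residuals.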

If the dependent variable is not continuous but categorical, linear regression can be transformed to logistic regression using a logit link function. Logistic regression is a simple, fast yet powerful classification algorithm. Here we discuss the binary case where the dependent variable \(y\) only takes binary values \(\{y_i\in\{-1,1\}\}_{i=1}^N\) (which can be easily extended to multi-class classification problems).

In logistic regression we use a different hypothesis class to try to predict the probability that a given example belongs to the '1' class versus the probability that it belongs to the '-1' class. Specifically, we will try to learn a function of the form \(p(y_i=1|x_i)=\sigma(\beta^T x_i)\) and \(p(y_i=-1|x_i)=1-\sigma(\beta^T x_i)\). Here \(\sigma(x)=\frac{1}{1+\exp(-x)}\) is a sigmoid function. Given the training examples \(\{x_i,y_i\}_{i=1}^N\), the parameter vector \(\beta\) can be learnt by maximizing the log-likelihood of \(\beta\) given the data set.
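One way to maximize the log-likelihood is plain gradient ascent. The sketch below assumes labels in {-1, 1}, for which the log-likelihood can be written as the sum of log sigmoid(y_i * beta . x_i). The learning rate, step count, and data are arbitrary choices for illustration, and `fit_logistic` is a hypothetical helper rather than any library's API.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, steps=2000):
    """Gradient ascent on sum_i log sigmoid(y_i * beta . x_i), y_i in {-1, 1}."""
    beta = [0.0] * len(xs[0])
    for _ in range(steps):
        grad = [0.0] * len(beta)
        for x, y in zip(xs, ys):
            margin = y * sum(b * v for b, v in zip(beta, x))
            weight = (1.0 - sigmoid(margin)) * y
            for j, v in enumerate(x):
                grad[j] += weight * v
        beta = [b + lr * g for b, g in zip(beta, grad)]
    return beta


# Made-up 1-D data with some class overlap; the leading 1.0 is a constant feature
xs = [[1.0, -2.0], [1.0, -1.0], [1.0, -0.5], [1.0, 0.5], [1.0, 1.0], [1.0, 2.0]]
ys = [-1, -1, 1, -1, 1, 1]
beta = fit_logistic(xs, ys)

p_high = sigmoid(beta[0] + beta[1] * 1.5)    # p(y = 1 | x = 1.5)
p_low = sigmoid(beta[0] + beta[1] * -1.5)    # p(y = 1 | x = -1.5)
print(round(p_high, 3), round(p_low, 3))
```

The fitted model assigns a probability above 0.5 to the '1' class for large positive inputs and below 0.5 for large negative inputs.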


Linear SVM and kernel SVM

A support vector machine (SVM) training algorithm finds the classifier represented by the normal vector \(w\) and bias \(b\) of the hyperplane. This hyperplane (boundary) separates different classes by as wide a margin as possible. The problem can be converted into a constrained optimization problem:

\begin{equation*}
\begin{aligned}
& \underset{w}{\text{minimize}} & & \|w\| \\
& \text{subject to} & & y_i(w^T X_i - b) \geq 1, \; i = 1, \ldots, n.
\end{aligned}
\end{equation*}
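To make the constraint concrete, the sketch below checks whether a candidate hyperplane satisfies \(y_i(w^T X_i - b) \geq 1\) for every example and reports the margin width, which is 2 divided by the norm of \(w\). The data and the two hand-picked hyperplanes are made up; this only verifies candidates and is not an SVM solver.

```python
import math


def satisfies_margin(w, b, points, labels):
    """Check y_i * (w . x_i - b) >= 1 for every training example."""
    return all(
        y * (sum(wj * xj for wj, xj in zip(w, x)) - b) >= 1
        for x, y in zip(points, labels)
    )


def margin_width(w):
    """Distance between the two margin hyperplanes: 2 / ||w||."""
    return 2.0 / math.sqrt(sum(wj * wj for wj in w))


# Made-up, linearly separable 2-D data
points = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (4.0, 1.0)]
labels = [-1, -1, 1, 1]

wide = ([1.0, 0.0], 2.0)      # hand-picked hyperplane x1 = 2
narrow = ([2.0, 0.0], 4.0)    # same boundary, larger ||w||
for w, b in (wide, narrow):
    print(satisfies_margin(w, b, points, labels), margin_width(w))
```

Both candidates are feasible, but the one with the smaller norm has the wider margin, which is why the objective minimizes the norm of \(w\).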

When the classes are not linearly separable, a kernel trick can be used to map a non-linearly separable space into a higher dimension linearly separable space.
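The idea of mapping into a higher dimension can be illustrated with an explicit feature map. A kernel achieves the same effect implicitly, without computing the mapped coordinates, so this is a simplification. The map x -> (x, x^2), the helper names, and the data are assumptions chosen for illustration.

```python
def feature_map(x):
    """Explicit map from 1-D to 2-D: x -> (x, x**2)."""
    return (x, x * x)


def separable_by_threshold(values, labels):
    """True if some threshold on a 1-D feature splits the two classes."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == -1]
    return max(neg) < min(pos) or max(pos) < min(neg)


# Made-up data: the positive class sits on both sides of the negative class
xs = [-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0]
labels = [1, 1, -1, -1, -1, 1, 1]

print(separable_by_threshold(xs, labels))          # original 1-D feature
squared = [feature_map(x)[1] for x in xs]
print(separable_by_threshold(squared, labels))     # second mapped coordinate
```

No single threshold separates the classes on the original axis, but a horizontal line in the mapped space (a threshold on the squared coordinate) does.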

When most independent variables (features) are numeric, logistic regression and SVM should be the first try for classification. These models are easy to implement, their parameters are easy to tune, and their performance is usually good, so they are appropriate for beginners.

Trees and ensemble trees

Decision trees, random forest and gradient boosting are all algorithms based on decision trees. There are many variants of decision trees, but they all do the same thing: subdivide the feature space into regions with mostly the same label. Decision trees are easy to understand and implement. However, they tend to over-fit the data when we exhaust the branches and go very deep with the trees. Random forest and gradient boosting are two popular ways to use tree algorithms to achieve good accuracy while overcoming the over-fitting problem.
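A single split is the building block of all of these methods. The sketch below picks the threshold on one feature that leaves the two sides with the most uniform labels, measured here by Gini impurity. The impurity measure is one common choice and an assumption on our part, as are the helper names and the data.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure region)."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best split on one feature."""
    best = (float("inf"), None)
    ordered = sorted(set(values))
    for lo, hi in zip(ordered, ordered[1:]):
        threshold = (lo + hi) / 2
        left = [y for v, y in zip(values, labels) if v <= threshold]
        right = [y for v, y in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        best = min(best, (score, threshold))
    return best[1], best[0]


# Made-up data: one numeric feature and two classes
values = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
labels = ["a", "a", "a", "b", "b", "b"]
print(best_split(values, labels))
```

A full tree applies this search recursively to each resulting region; stopping the recursion early is one way to limit the over-fitting described above.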

Neural networks and deep learning

A convolutional neural network architecture (image source: Wikipedia, Creative Commons)

Neural networks flourished in the mid-1980s due to their parallel and distributed processing ability. But research in this field was impeded by the ineffectiveness of the back-propagation training algorithm that is widely used to optimize the parameters of neural networks. Support vector machines (SVM) and other simpler models, which can be easily trained by solving convex optimization problems, gradually replaced neural networks in machine learning.

In recent years, new and improved training techniques such as unsupervised pre-training and layer-wise greedy training have led to a resurgence of interest in neural networks. Increasingly powerful computational capabilities, such as graphical processing unit (GPU) and massively parallel processing (MPP), have also spurred the revived adoption of neural networks. The resurgent research in neural networks has given rise to the invention of models with thousands of layers.

In other words, shallow neural networks have evolved into deep learning neural networks. Deep neural networks have been very successful for supervised learning. When used for speech and image recognition, deep learning performs as well as, or even better than, humans. Applied to unsupervised learning tasks, such as feature extraction, deep learning also extracts features from raw images or speech with much less human intervention.

A neural network consists of three parts: input layer, hidden layers and output layer. The training samples define the input and output layers. When the output layer is a categorical variable, then the neural network is a way to address classification problems. When the output layer is a continuous variable, then the network can be used to do regression. When the output layer is the same as the input layer, the network can be used to extract intrinsic features. The number of hidden layers defines the model complexity and modeling capacity.
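The three parts can be seen in a forward pass through a tiny network. In this sketch the weights are hand-set, not trained, so that the network computes XOR, a function no single linear unit can represent. The architecture (two inputs, two ReLU hidden units, one linear output) is an assumption chosen to keep the example small.

```python
def relu(z):
    return max(0.0, z)


def forward(x1, x2):
    """Input layer (x1, x2) -> hidden layer of 2 ReLU units -> 1 linear output."""
    h1 = relu(x1 + x2)          # hidden unit 1
    h2 = relu(x1 + x2 - 1.0)    # hidden unit 2
    return h1 - 2.0 * h2        # output layer


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), forward(x1, x2))
```

In practice the weights are learnt from training samples, and adding hidden layers or units increases the modeling capacity.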

k-means/k-modes, GMM (Gaussian mixture model) clustering

K-means/k-modes and GMM clustering aim to partition n observations into k clusters. K-means defines a hard assignment: each sample is associated with one and only one cluster. GMM, however, defines a soft assignment: each sample has a probability of being associated with each cluster. Both algorithms are simple and fast enough for clustering when the number of clusters k is given.
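The difference between hard and soft assignment can be sketched in one dimension. `kmeans_1d` is a bare-bones version of the usual assign-then-average loop with starting centers supplied by the caller. `soft_assignment` computes only GMM-style membership probabilities for equal-weight Gaussians with a shared, fixed sigma; it is not a full GMM fit. Both helpers and the data are illustrative assumptions.

```python
import math


def kmeans_1d(points, centers, iterations=10):
    """Assign each point to its nearest center, then recompute the means."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda k: abs(p - centers[k]))
            clusters[nearest].append(p)          # hard assignment
        centers = [sum(c) / len(c) if c else centers[k]
                   for k, c in enumerate(clusters)]
    return centers


def soft_assignment(p, means, sigma=1.0):
    """Membership probabilities for equal-weight Gaussians with shared sigma."""
    dens = [math.exp(-((p - m) ** 2) / (2 * sigma ** 2)) for m in means]
    total = sum(dens)
    return [d / total for d in dens]


points = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0]
centers = kmeans_1d(points, centers=[0.0, 10.0])
print(centers)
print(soft_assignment(5.0, centers))   # a point halfway between the centers
print(soft_assignment(2.0, centers))   # a point close to the first center
```

A point halfway between the two centers gets equal membership in both clusters under the soft assignment, whereas k-means would have to place it in exactly one.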

DBSCAN

When the number of clusters k is not given, DBSCAN (density-based spatial clustering) can be used by connecting samples through density diffusion.
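A compact one-dimensional sketch of the density-based idea follows. A point with at least `min_pts` neighbors within distance `eps` (counting itself) starts or extends a cluster, and points that no dense region reaches are labeled as noise. The function name, parameter values, and data are assumptions for illustration.

```python
def dbscan_1d(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)

    def neighbors(i):
        return [j for j, q in enumerate(points) if abs(points[i] - q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1                # provisional noise
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster       # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:      # j is a core point: keep expanding
                queue.extend(nbrs)
    return labels


points = [1.0, 1.2, 1.4, 5.0, 5.1, 5.3, 9.0]
print(dbscan_1d(points, eps=0.5, min_pts=3))
```

The number of clusters is discovered from the data rather than supplied; the isolated point at 9.0 is reported as noise.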

Hierarchical clustering

Hierarchical partitions can be visualized using a tree structure (a dendrogram). Hierarchical clustering does not need the number of clusters as an input, and the partitions can be viewed at different levels of granularity (i.e., clusters can be refined or coarsened) by choosing different values of K.
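Viewing the same hierarchy at different levels can be sketched with bottom-up (agglomerative) merging in one dimension. The choice of single linkage, where the distance between clusters is the gap between their closest members, is an assumption, as are the function name and data.

```python
def single_linkage(points, k):
    """Merge the two closest clusters repeatedly until k clusters remain."""
    clusters = [[p] for p in sorted(points)]
    while len(clusters) > k:
        # In sorted 1-D data the closest pair of clusters is always adjacent
        gaps = [clusters[i + 1][0] - clusters[i][-1]
                for i in range(len(clusters) - 1)]
        i = gaps.index(min(gaps))
        clusters[i:i + 2] = [clusters[i] + clusters[i + 1]]
    return clusters


points = [1.0, 1.5, 5.0, 5.4, 9.0]
for k in (3, 2):
    print(k, single_linkage(points, k))
```

Stopping at a larger k gives a finer partition and a smaller k gives a coarser one; the sequence of merges is what a dendrogram draws.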

PCA, SVD and LDA

We generally do not want to feed a large number of features directly into a machine learning algorithm since some features may be irrelevant or the “intrinsic” dimensionality may be smaller than the number of features. Principal component analysis (PCA), singular value decomposition (SVD), and latent Dirichlet allocation (LDA) can all be used to perform dimension reduction.

PCA is an unsupervised dimension-reduction method that maps the original data space into a lower-dimensional space while preserving as much information as possible. PCA finds the subspace that best preserves the data variance, with the subspace defined by the dominant eigenvectors of the data’s covariance matrix.
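For two features, the dominant eigenvector of the covariance matrix can be found with a few lines of power iteration. This is a sketch under simplifying assumptions: two dimensions only, a fixed starting vector that must not be orthogonal to the dominant eigenvector, and made-up data. The helper name is hypothetical.

```python
import math


def first_principal_component(data, iterations=100):
    """Dominant eigenvector of the 2x2 covariance matrix via power iteration."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    centered = [(x - mx, y - my) for x, y in data]
    cxx = sum(x * x for x, _ in centered) / (n - 1)
    cyy = sum(y * y for _, y in centered) / (n - 1)
    cxy = sum(x * y for x, y in centered) / (n - 1)
    v = (1.0, 0.0)
    for _ in range(iterations):
        w = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(*w)
        v = (w[0] / norm, w[1] / norm)
    return v, centered


# Made-up points lying exactly on the line y = x
data = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]
direction, centered = first_principal_component(data)
# Project each centered 2-D point onto the direction: 2-D -> 1-D
projected = [x * direction[0] + y * direction[1] for x, y in centered]
print(direction)
print(projected)
```

Because the points lie on a line, one coordinate along that line preserves all of the variance; with real data the projection keeps the direction of largest variance and discards the rest.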

The SVD is related to PCA in the sense that the SVD of the centered data matrix (features versus samples) provides the dominant left singular vectors that define the same subspace as found by PCA. However, SVD is a more versatile technique as it can also do things that PCA may not do. For example, the SVD of a user-versus-movie matrix is able to extract the user profiles and movie profiles that can be used in a recommendation system. In addition, SVD is also widely used as a topic modeling tool, known as latent semantic analysis, in natural language processing (NLP).

A related technique in NLP is latent Dirichlet allocation (LDA). LDA is a probabilistic topic model that decomposes documents into topics in a similar way to how a Gaussian mixture model (GMM) decomposes continuous data into Gaussian densities. Unlike the GMM, LDA models discrete data (words in documents) and constrains the topics to be a priori distributed according to a Dirichlet distribution.

Conclusions

The workflow is easy to follow. The takeaway messages when trying to solve a new problem are:

- Define the problem. What problems do you want to solve?

- Start simple. Be familiar with the data and the baseline results.

- Then try something more complicated.

SAS Visual Data Mining and Machine Learning provides a good platform for beginners to learn machine learning and apply machine learning methods to their problems. Sign up for a free trial today!

Artificial intelligence (AI), which has been around since the 1950s, has seen ebbs and flows in popularity over the last 60+ years. But today, with the recent explosion of big data, high-powered parallel processing, and advanced neural algorithms, we are seeing a renaissance in AI--and companies from Amazon to Facebook to Google are scrambling to take the lead. According to AI expert Roman Yampolskiy, 2016 was the year of 'AI on steroids,' and its explosive growth hasn't stopped.

While there are different forms of AI, machine learning (ML) represents today's most widely valued mechanism for reaching intelligence. Here's what it means.

SEE: Managing AI and ML in the enterprise (ZDNet special report) | Download the report as a PDF (TechRepublic)

Executive summary

- What is machine learning? Machine learning is a subfield of artificial intelligence. Instead of relying on explicit programming, it is a system through which computers use a massive set of data and apply algorithms to 'train' on--to teach themselves--and make predictions.

- When did machine learning become popular? The term 'artificial intelligence' was coined in the 1950s by John McCarthy. Machine learning became popular in the 1990s, and returned to the public eye when Google's DeepMind beat the world champion of Go in 2016. Since then, ML applications and machine learning's popularity have only increased.

- Why does machine learning matter? Machine learning systems are able to quickly apply knowledge and training from large data sets to excel at facial recognition, speech recognition, object recognition, translation, and many other tasks.

- Which industries use machine learning? Machine learning touches industries spanning from government to education to healthcare. It can be used by businesses focused on marketing, social media, customer service, driverless cars, and many more. It is now widely regarded as a core tool for decision making.

- How do businesses use machine learning? Business applications of machine learning are numerous, but all boil down to one type of use: Processing, sorting, and finding patterns in huge amounts of data that would be impractical for humans to make sense of.

- What are the security and ethical concerns about machine learning? AI has already been trained to bypass advanced antimalware software, and it has the potential to be a huge security risk in the future. Ethical concerns also abound, especially in relation to the loss of jobs and the practicality of allowing machines to make moral decisions like those that would be necessary in self-driving vehicles.

- What machine learning tools are available? Businesses like IBM, Amazon, Microsoft, Google, and others offer tools for machine learning. There are free platforms as well.

SEE: Managing AI and ML in the enterprise 2020: Tech leaders increase project development and implementation (TechRepublic Premium)

What is machine learning?

Machine learning is a branch of AI. Other tools for reaching AI include rule-based engines, evolutionary algorithms, and Bayesian statistics. While many early AI programs, like IBM's Deep Blue, which defeated Garry Kasparov in chess in 1997, were rule-based and dependent on human programming, machine learning is a tool through which computers have the ability to teach themselves, and set their own rules. In 2016, Google's DeepMind beat the world champion in Go by using machine learning--training itself on a large data set of expert moves.

There are several kinds of machine learning:

- In supervised learning, the 'trainer' presents the computer with labeled examples that connect an input (an object's feature, like 'smooth,' for example) with an output (the object itself, like a marble).

- In unsupervised learning, the computer is given inputs and is left alone to discover patterns.

- In reinforcement learning, a computer system receives input continuously (in the case of a driverless car receiving input about the road, for example) and constantly is improving.

A massive amount of data is required to train algorithms for machine learning. First, the 'training data' must be labeled (e.g., a GPS location attached to a photo). Then it is 'classified.' This happens when features of the object in question are labeled and put into the system with a set of rules that lead to a prediction. For example, 'red' and 'round' are inputs into the system that leads to the output: Apple. Similarly, a learning algorithm could be left alone to create its own rules that will apply when it is provided with a large set of the object--like a group of apples, and the machine figures out that they have properties like 'round' and 'red' in common.

SEE: What is machine learning? Everything you need to know (ZDNet)

Many cases of machine learning involve 'deep learning,' a subset of ML that uses algorithms that are layered, and form a network to process information and reach predictions. What distinguishes deep learning is the fact that the system can learn on its own, without human training.

Additional resources

- Understanding the differences between AI, machine learning, and deep learning (TechRepublic)

- Video: Is machine learning right for your business? (TechRepublic)

- Microsoft says AI and machine learning driven by open source and the cloud (ZDNet)

- IBM Watson: The inside story of how the Jeopardy-winning supercomputer was born, and what it wants to do next (TechRepublic cover story)

- Google AI gets better at 'seeing' the world by learning what to focus on (TechRepublic)

When did machine learning become popular?

Machine learning was popular in the 1990s, and has seen a recent resurgence. Here are some timeline highlights.

- 2011: Google Brain was created, which was a deep neural network that could identify and categorize objects.

- 2014: Facebook's DeepFace algorithm was introduced. The algorithm could recognize people from a set of photos.

- 2015: Amazon launched its machine learning platform, and Microsoft offered a Distributed Machine Learning Toolkit.

- 2016: Google's DeepMind program 'AlphaGo' beat the world champion, Lee Sedol, at the complex game of Go.

- 2017: Google announced that its machine learning tools can recognize objects in photos and understand speech better than humans.

- 2018: Alphabet subsidiary Waymo launched the ML-powered self-driving ride hailing service in Phoenix, AZ.

- 2020: Machine learning algorithms are brought into play against the COVID-19 pandemic, helping to speed vaccine research and improve the ability to track the virus' spread.

Additional resources

- Google announces 'hum to search' machine learning music search feature (TechRepublic)

- Microsoft releases preview of Lobe training app for machine-learning (ZDNet)

- Alibaba neural network defeats human in global reading test (ZDNet)

Why does machine learning matter?

Aside from the tremendous power machine learning has to beat humans at games like Jeopardy, chess, and Go, machine learning has many practical applications. Machine learning tools are used to translate messages on Facebook, spot faces from photos, and find locations around the globe that have certain geographic features. IBM Watson is used to help doctors make cancer treatment decisions. Driverless cars use machine learning to gather information from the environment. Machine learning is also central to fraud prevention. Unsupervised machine learning, combined with human experts, has been proven to be very accurate in detecting cybersecurity threats, for example.

SEE: All of TechRepublic's cheat sheets and smart person's guides

While there are many potential benefits of AI, there are also concerns about its usage. Many worry that AI (like automation) will put human jobs at risk. And whether or not AI replaces humans at work, it will definitely shift the kinds of jobs that are necessary. Machine learning's requirement for labeled data, for example, has meant a huge need for humans to manually do the labeling.

As machine learning and AI in the workplace have evolved, many of its applications have centered on assisting workers rather than replacing them outright. This was especially true during the COVID-19 pandemic, which forced many companies to send large portions of their workforce home to work remotely, leading to AI bots and machine learning supplementing humans to take care of mundane tasks.

There are several institutions dedicated to exploring the impact of artificial intelligence. Here are a few (culled from our Twitter list of AI insiders).

- The Future of Life Institute brings together some of the greatest minds--from the co-founder of Skype to professors at Harvard and MIT--to explore some of the big questions about our future with machines. This Cambridge-based institute also has a stellar lineup on its scientific advisory board, from Nick Bostrom to Elon Musk to Morgan Freeman.

- The Future of Humanity Institute at Oxford is one of the premier sites for cutting-edge academic research. The FHI Twitter feed is a wonderful place for content on the latest in AI, and the many retweets by the account are also useful in finding other Twitter users who are working on the latest in artificial intelligence.

- The Machine Intelligence Research Institute at Berkeley is an excellent resource for the latest academic work in artificial intelligence. MIRI exists, according to Twitter, not only to investigate AI, but also to 'ensure that the creation of smarter-than-human intelligence has a positive impact.'

Additional resources

- IBM Watson CTO: The 3 ethical principles AI needs to embrace (TechRepublic)

- Forrester: Automation could lead to another jobless recovery (TechRepublic)

- Machine learning helps science tackle Alzheimer's (CBS News)

Which industries use machine learning?

Just about any organization that wants to capitalize on its data to gain insights, improve relationships with customers, increase sales, or be competitive at a specific task will rely on machine learning. It has applications in government, business, education--virtually anyone who wants to make predictions, and has a large enough data set, can use machine learning to achieve their goals.

SEE: Sensor'd enterprise: IoT, ML, and big data (ZDNet special report) | Download the report as a PDF (TechRepublic)

Along with analytics, machine learning can be used to supplement human workers by taking on mundane tasks and freeing them to do more meaningful, innovative, and productive work. As with analytics, any business that has employees dealing with repetitive, high-volume tasks can benefit from machine learning.

Additional resources

- The 6 most in-demand AI jobs, and how to get them (TechRepublic)

- Cheat sheet: How to become a data scientist (TechRepublic)

- MIT's automated machine learning works 100x faster than human data scientists (TechRepublic)

- Apple, IBM add machine learning muscle to enterprise iOS pact (ZDNet)

How do businesses use machine learning?

2017 was a huge year for growth in the capabilities of machine learning, and 2018 set the stage for explosive growth: by early 2020, 85% of businesses were using some form of AI in their deployed applications.

One of the things that may be holding that growth back, Deloitte said, is confusion--just what is machine learning capable of doing for businesses?

There are numerous examples of how businesses are leveraging machine learning, and all of it breaks down to the same basic thing: Processing massive amounts of data to draw conclusions much faster than a team of data scientists ever could.

Some examples of business uses of machine learning include:

- Alphabet-owned security firm Chronicle is using machine learning to identify cyberthreats and minimize the damage they can cause.

- Airbus Defense & Space is using ML-based image recognition technology to decrease the error rate of cloud recognition in satellite images.

- Global Fishing Watch is fighting overfishing by monitoring the GPS coordinates of fishing vessels, which has enabled them to monitor the whole ocean at once.

- Insurance firm AXA raised accident prediction accuracy by 78% by using machine learning to build accurate driver risk profiles.

- Japanese food safety company Kewpie has automated detection of defective potato cubes so that workers don't have to spend hours watching for them.

- Yelp uses deep learning to classify photos people take of businesses by certain tags.

- MIT's OptiVax can develop and test peptide vaccines for COVID-19 and other diseases in a completely virtual environment with variables including geographic coverage, population data, and more.

SEE: Executive's guide to AI in business (free ebook) (TechRepublic)

Any business that deals with big data analysis can use machine learning technology to speed up the process and put humans to better use, and the particulars can vary greatly from industry to industry.

AI applications don't come first--they're tools used to solve business problems, and should be seen as such. Finding the proper application for machine learning technology involves asking the right questions, or being faced with a massive wall of data that would be impossible for a human to process.

Additional resources

- 5 tips to overcome machine learning adoption barriers in the enterprise (TechRepublic)

- How the NFL and Amazon unleashed 'Next Gen Stats' to grok football games (TechRepublic)

- Robot boats from MIT can now carry passengers (TechRepublic)

- Predictive analytics: A cheat sheet (TechRepublic)

- How ML and AI will transform business intelligence and analytics (ZDNet)

- Zoom meetings: You can now add live captions to your call – and they actually work (ZDNet)

- AI and the Future of Business (ZDNet special feature)

- How to launch a successful AI startup (TechRepublic)

- The practical applications of AI: 6 videos (TechRepublic)

What are the security and ethical concerns about machine learning?

There are a number of concerns about using machine learning and AI, including the security of cloud-hosted data and the ethical considerations of self-driving cars.

From a security perspective, there are always concerns about the theft of large amounts of data, but security fears go beyond how to lock down data repositories.

Security professionals are nearly universally concerned about the potential of AI to bypass antimalware software and other security measures, and they're right to be worried: Artificial intelligence software has been developed that can modify malware to bypass AI-powered antimalware platforms.

Several tech leaders, like Elon Musk, Stephen Hawking, and Bill Gates, have expressed worries about how AI may be misused, and the importance of creating ethical AI. As evidenced by the disaster of Microsoft's racist chatbot, Tay, AI can go wrong if left unmonitored.

SEE: Machine learning as a service: Can privacy be taught? (ZDNet)

Ethical concerns abound in the machine learning world as well; one example is a self-driving vehicle adaptation of the trolley problem thought experiment. In short, when a self-driving vehicle is presented with a choice between killing its occupants or a pedestrian, which is the right choice to make? There's no clear answer with philosophical problems like this one--no matter how the machine is programmed, it has to make a moral judgement about the value of human lives.

Deepfake videos, which realistically replace one person's face and/or voice with someone else's based on photos and other recordings, have the potential to upset elections, insert unwilling people into pornography, and otherwise place individuals in situations they aren't okay with. The far-reaching effects of this machine learning-powered tool could be devastating.

Beyond the questions of whether learning machines should be given the ability to make moral decisions, and whether access to certain ML tools is socially dangerous, there is another major human cost likely to come with machine learning: job loss.

If the AI revolution is truly the next major shift in the world, there are a lot of jobs that will cease to exist, and it isn't necessarily the ones you'd think. While many low-skilled jobs are definitely at risk of being eliminated, so are jobs that require a high degree of training but are based on simple concepts like pattern recognition.

The work of radiologists, pathologists, oncologists, and similar professionals is based on finding and diagnosing irregularities, something that machine learning is particularly suited to do.

There's also the ethical concern of barrier to entry--while machine learning software itself isn't expensive, only the largest enterprises in the world have the vast stores of data necessary to properly train learning machines to provide reliable results.

As time goes on, some experts predict that it's going to become more difficult for smaller firms to make an impact, making machine learning primarily a game for the largest, wealthiest companies.

Additional resources

- Why AI bias could be a good thing (TechRepublic)

- Google AI executive sees a world of trillions of devices untethered from human care (ZDNet)

- AI and ethics: One-third of executives are not aware of potential AI bias (TechRepublic)

- Artificial data reduces privacy concerns and helps with big data analysis (TechRepublic)

- Can AI really be ethical and unbiased? (ZDNet)

- 3 ways criminals use artificial intelligence in cybersecurity attacks (TechRepublic)

- Artificial Intelligence: Legal, ethical, and policy issues (ZDNet)

What machine learning tools are available?

There are many online resources about machine learning. To get an overview of how to create a machine learning system, check out this series of YouTube videos by Google Developer. There are also classes on machine learning from Coursera and many other institutions.

And to integrate machine learning into your organization, you can use resources like Microsoft's Azure, Google Cloud Machine Learning, Amazon Machine Learning, IBM Watson, and free tools like scikit-learn.

Additional resources

- Amazon unveils dozens of machine learning tools (TechRepublic)

- Microsoft offers developers pre-built machine learning models for Windows 10 apps (TechRepublic)

- Cloud AutoML: How Google aims to simplify the grunt work behind AI and machine learning models (ZDNet)

- Amazon AI: Cheat sheet (TechRepublic)

- AI investment increased during the pandemic, and many businesses plan to do more, Gartner found (TechRepublic)

- Facebook's machine learning director shares tips for building a successful AI platform (TechRepublic)

- AI helpers aren't just for Facebook's Zuckerberg: Here's how to build your own (TechRepublic)

- How developers can take advantage of machine learning on Google Cloud Platform (TechRepublic)

- How to prepare your business to benefit from AI (TechRepublic)

Editor's note: This article was updated by Brandon Vigliarolo.